Testing Strategies

When developing software it’s important to carry out proper testing.

There are several different strategies that you can use:

- Static analysis - use software tools to read your code and look for potential problems

- Dynamic analysis - use runtime software tools to find memory and threading problems

- Unit tests - write small programs that each isolate a single function of the main program, feed it ground truth data and check the output against the expected result

- White box testing - try to do things with the software that you know will be challenging or will exercise different aspects of the internal structures

- Black box testing - throw any input data at the software without considering how it is designed to respond

- Code review - sit down and read through the code, making sure it is consistent, looking for mistakes

- Dogfooding - use your own software in the way it is intended and note down problems

- Fuzzing - create random input, throw it at the software and detect when the software crashes or fails to produce a result
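To make two of these strategies concrete, here is a minimal sketch of a unit test and a fuzzing loop in Python. The function under test, `parse_frame_size`, is a hypothetical example invented for illustration and is not taken from any real product. The ground truth table and the fuzzing alphabet are likewise assumptions.

```python
import random


def parse_frame_size(text):
    """Parse a string such as "1920x1080" into (width, height).

    Hypothetical function under test; raises ValueError on bad input.
    """
    width, sep, height = text.partition("x")
    if not sep or not (width.isdecimal() and height.isdecimal()):
        raise ValueError(f"not a frame size: {text!r}")
    w, h = int(width), int(height)
    if w == 0 or h == 0:
        raise ValueError(f"frame size must be non-zero: {text!r}")
    return w, h


def run_unit_tests():
    """Unit test: feed ground truth data and compare with the expected result."""
    cases = {
        "1920x1080": (1920, 1080),
        "640x480": (640, 480),
        "1x1": (1, 1),
    }
    for text, expected in cases.items():
        assert parse_frame_size(text) == expected, text
    return len(cases)


def fuzz(iterations=10_000, seed=0):
    """Fuzzing: feed random strings and collect any unexpected failures."""
    rng = random.Random(seed)  # fixed seed so a failure can be reproduced
    alphabet = "0123456789x -."
    failures = []
    for _ in range(iterations):
        length = rng.randint(0, 12)
        text = "".join(rng.choice(alphabet) for _ in range(length))
        try:
            parse_frame_size(text)
        except ValueError:
            pass  # rejecting bad input cleanly is the expected behaviour
        except Exception as exc:  # any other exception counts as a "crash"
            failures.append((text, exc))
    return failures


if __name__ == "__main__":
    print("unit test cases passed:", run_unit_tests())
    print("unexpected fuzz failures:", fuzz())
```

The unit test knows the correct answer for each input. The fuzzing loop does not: it can only tell whether the code failed in an unexpected way. That is why the two complement each other.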

At Tiliam, we’re using all these strategies except for fuzzing. It’s not a perfect system though:

- Static analysis can produce a large number of false positives that distract from higher priority errors

- Dynamic analysis can be slow and produce many messages caused by system software not under your control

- Unit tests can become irrelevant or broken if not maintained

- White box testing, black box testing and code review can all go wrong when the expected result differs from the correct result; with complex software it is easy to be confused about what the expected result should be

- Code review can be very slow

- Dogfooding can settle into a few very specific paths through the software; those paths become highly tested while less-used functions get much less testing

The first step in fixing a problem is to know it exists. This is only half the problem though; we’ve only talked about testing the program and the user interface. What about the problem of using suitable test video? Ideally we need video in all the formats for all possible scenarios.

In the case of MotionBend that means having a good range of camera motions. Fake shaky footage tends to look fake and is not as good a test as real shaky video, because fake shake is nowhere near as random as real shake. For the high-quality video formats, people usually don’t want to strap their expensive, ultra-high-resolution cameras and lenses to the side of a car or bicycle, or to have to fix things in post. Nonetheless, we have a large number of videos that we use for testing and hope that they are representative of what people are using the software for.
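One way to check how representative a collection is would be to tag each test clip and list the combinations that no clip covers. This is a minimal sketch, assuming every clip is tagged with a format and a camera motion. The labels and clip names are hypothetical placeholders, not a description of any real test library.

```python
from itertools import product

# Hypothetical labels; a real list would come from the formats and
# camera motions the software is expected to handle.
FORMATS = ["format_a", "format_b", "format_c"]
MOTIONS = ["handheld_walk", "vehicle_mount", "pan"]


def missing_combinations(clips, formats=FORMATS, motions=MOTIONS):
    """Return the (format, motion) pairs that no test clip covers."""
    covered = {(clip["format"], clip["motion"]) for clip in clips}
    return [pair for pair in product(formats, motions) if pair not in covered]


if __name__ == "__main__":
    # Placeholder catalogue of tagged test clips.
    clips = [
        {"name": "clip_001", "format": "format_a", "motion": "handheld_walk"},
        {"name": "clip_002", "format": "format_a", "motion": "pan"},
        {"name": "clip_003", "format": "format_b", "motion": "vehicle_mount"},
    ]
    for fmt, motion in missing_combinations(clips):
        print(f"no test clip for {fmt} + {motion}")
```

A report like this only shows which tagged combinations are missing. It says nothing about whether the clips that do exist contain realistic shake.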

Recently we’ve been doing some work on an interesting side project - porting MotionBend to Linux. Most of the internals of MotionBend are not platform specific, so it only took a few days to get a build running. Apple open sourced Grand Central Dispatch, so we were able to make use of that; in fact, it’s a standard package in Debian Linux.

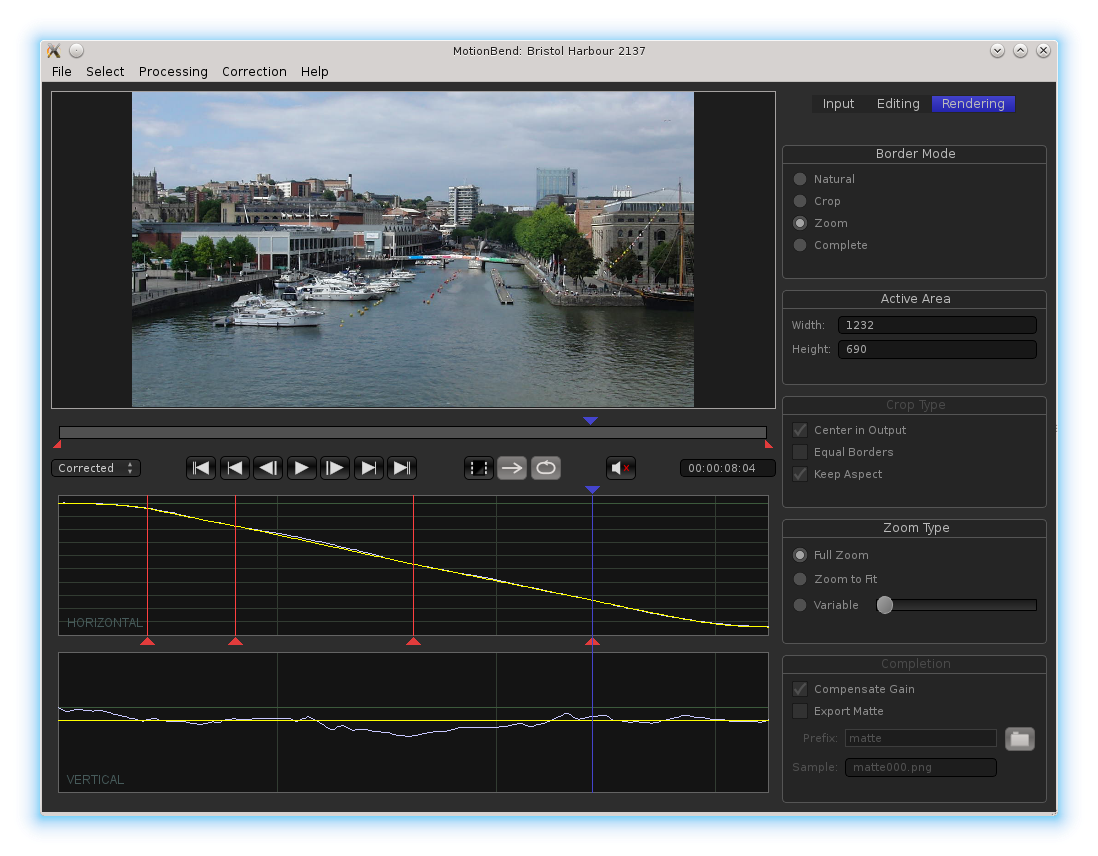

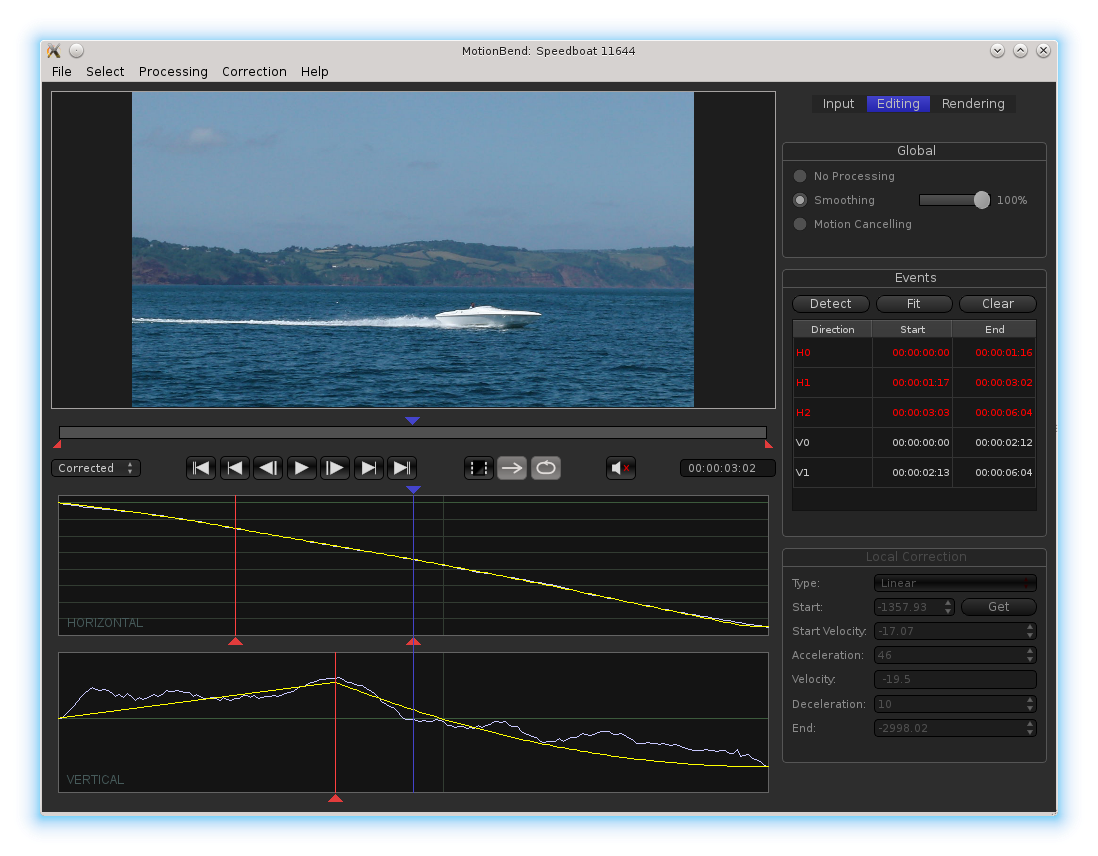

The key area for future development is access to more video formats, since we don’t have the QuickTime framework any more. Building and using MotionBend on Linux opens up the possibility of using Linux software tools for additional testing and of scheduling more automated testing. As the screenshots show, it’s not finished yet.

Linux MotionBend Screenshot

Linux MotionBend Screenshot